I had a maths-related nightmare that (initially) was concerned about counting solutions of a linear equation modulo with integral coefficients. Though the whole detail of the dream cannot be written down due to privacy reasons, at least I can write a simple introduction on solving this specific problem here.

Update on 26 December 2018: The original solution is wrong. See the correction. Another update the same day: The original method is indeed correct, see the correction to correction.

In the dream, someone modelled the possible versions of wicked nursery rhymes as solutions to linear equations modulo , and asked how to count the solutions. Write the equations as a matrix equation where . The objective is to count the solutions (and possibly represent them in an easy-to-enumerate way).

Smith normal form of matrix over PID

If you are familiar with structural theorem of finitely generated module over a principal ideal domain, or -matrices (used for determining whether two matrices are similar), or just Smith normal form, you can skip this part.

Recall the elementary reductions for matrices over a field. Row reductions represent manipulations of equations. Rarely used in solving linear equations are column reductions, which represent change of variables. The implication of elementary reductions is that any invertible matrix is a product of several elementary matrices. Moreover, for any matrix (over a field), there exist two invertible matrices and a natural number , such that where is the identity matrix. As we all know, is the rank of the matrix.

The similar thing for the second part goes for matrices over a PID (or more generally, a principal ideal ring or a Bézout domain). For any matrix , there exist invertible matrices , a natural number and ring elements such that for and The counterpart of the first part, that invertible matrices are products of ‘elementary matrices’, holds for Euclidean domains. It holds for general Bézout domain if we allow a new kind of elementary matrices, ‘coprime combination’, which essentially means multiplying by for any for two selected rows (resp. columns). Including this kind of elementary matrices generate the general linear group. Read the Wikipedia article for Smith normal form for an example.

The ’s appearing on the diagonal are called invariant factors, which are unique up to associatedness (multiplication by a unit). The prime factorisation of them corresponds to elementary divisors. The invariant factors can be determined by the quotients of successive determinant factors, the -th of which is a greatest common divisor of all minors.

Solving the equation

Smith normal form answers the question in the nightmare. Recall that we want to count the solutions (and write them in an easy-to-enumerate way) of First find out the Smith normal form of as You can use the Smith normal form of as a matrix over either or , and for the latter case, it is possible to write (the integer representatives of the representatives of) the invariant factors as a positive divisor of , which happen to be the greatest common divisors of the corresponding factors over and (see here). Note that for the former case, are invertible over , hence are also invertible over (when naturally mapped via the quotient map). The equation can be rewritten as Since is invertible, is a bijection over . The problem reduces to counting the solutions of . This is a diagonal system of equations, of which the number of solutions is a piece of cake — the product of the number of solutions to each single equation. Consider the equation where is a positive divisor of . There are solutions if , which are Otherwise, there is no solution. Finally, any solution of the original equation is just , where is a solution to the diagonal system.

Correction

The strikethrough texts above are wrong. Each equation

where might not be (representable by) a factor of , by Chinese remainder theorem (CRT), is equivalent to the system

where runs through prime powers such that and . Multiplied by appropriate coefficients, they can be rewritten as

where is the maximum prime power dividing yet below or equal to . The solutions to each equation are, this time for real, easy. If , there are solutions, namely

Otherwise, there is none. Each component in the diagonal system can be combined from the corresponding subset of the expanded system by CRT, which gives a formula to enumerate the solutions. If we just want to count, we can just multiply the number of solutions of each modulo-prime-power equation.

Remarks. CRT can be invoked before or after invariant factorisation. Doing so in the later stage allows reusing the factorisation over or .

Correction to Correction

I thought the part about choosing as a divisor of was wrong, but it turns out it is correct. Moreover, we can pretend to be some of the invariant factors that follow non-zero entries (as is just zero in ), which unifies the way we write equations. The rewriting isn’t quite immediate. If you read carefully, is not a principal ideal domain, though matrices still admit an (and even very nice) invariant factorisation. Let’s slow down and look carefully how we could reach the factorisation we need.

Let be the standard factorisation, where are distinct primes. For any , factorise , where . Let and , then . Now CRT guarantees some such that Note this is invertible modulo (because it’s invertible in each equation in CRT), let an inverse be . Note that which, again by CRT, yields . This means any non-zero number is associated with (a unit factor away from) in .

Now we turn to invariant factorisation of matrices over . First, find out the invariant factorisation of over as . For each diagonal entry , make it positive by adding a multiple of , then invoke the above result and do one more row reduction (multiplying a unit) to transform so that each diagonal entry is a factor of , whence becomes .

Remarks. The above method isn’t more desirable (for human; the time to find out the invariant factors dominates the total running time) than the one in the first correction, because it, after all, has to go through CRT to find out , which involves breaking equations down to the prime powers and putting them back together. An obvious attempt of fix would be using Euclidean algorithm to find out Bézout’s identity . However, this method (without extra guarantees on the result returned) might fail, e.g., , but .

Remarks. However, the above method is faster (for human) for counting. Since multiplying by doesn’t affect the prime powers in that are relevant (i.e., also primes whose powers appearing in ), we can just test whether and conclude the count.

Anecdotes

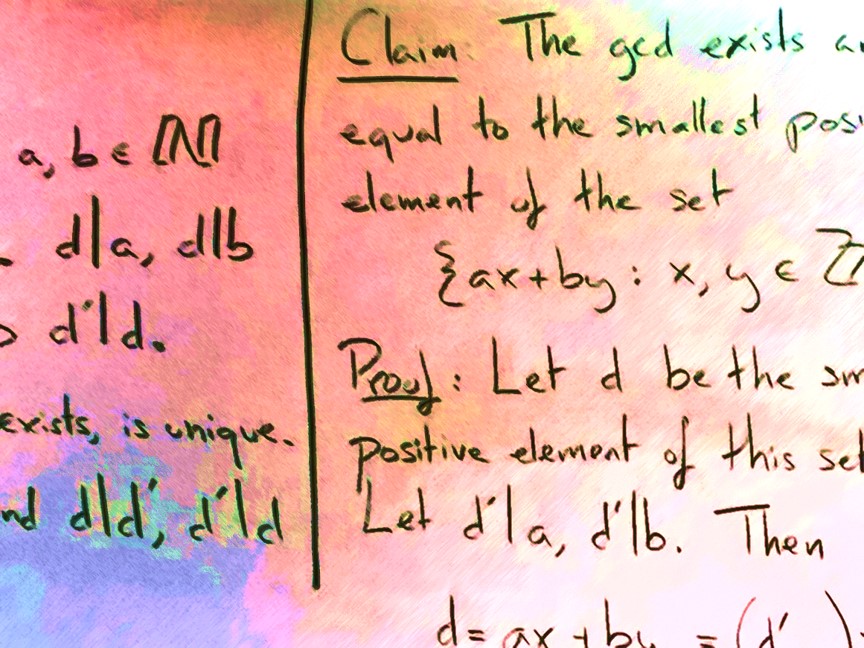

- I dug through my camera roll to find a photo for the hero image so that I don’t have to deal with copyright issues. The photo is a picture of John Steinberger’s lecture of Mathematics in Computer Science (before those fancy artistic effects), and it is chosen based on its content relevance. The picture demonstrates the Bézout’s identity. Just to clarify, the lecture wasn’t a nightmare.

- My blog building system is updated for this entry so that I can draw horizontal lines inside matrices.

- Now that I have to revise twice after the entry’s original publication, this really is a nightmare!

Please enable JavaScript to view the comments powered by Disqus.